Questions Asked During the Presentation WebSockets for Real-Time and Interactive Interfaces at Code4Lib 2014

Published: 2014-04-07 19:30 EDT

During my presentation on WebSockets, there were a couple points where folks in the audience could enter text in an input field that would then show up on a slide. The data was sent to the slides via WebSockets. It is not often that you get a chance to incorporate the technology that you’re talking about directly into how the presentation is given, so it was a lot of fun. At the end of the presentation, I allowed folks to anonymously submit questions directly to the HTML slides via WebSockets.

I ran out of time before I could answer all of the questions that I saw. I’ll try to answer them now.

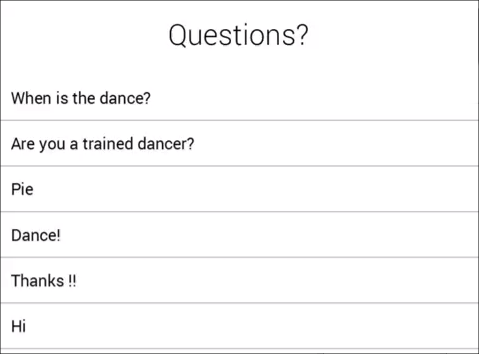

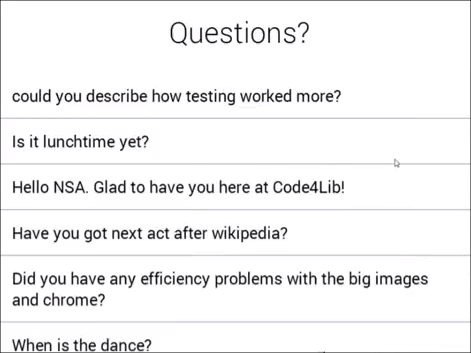

Questions From Slides

In the YouTube video you can see the following questions come in at the end of my presentation (at 1h38m26s). ([Full presentation starts here](https://www.youtube.com/watch?v=_8MJATYsqbY&feature=share&t=1h25m37s).) Some lines that came in were not questions at all. For those that really are questions, I’ll answer them now, even if I already answered them.

Are you a trained dancer?

No. Before my presentation I was joking with folks about how little of a presentation I’d have, at least for the interactive bits, if the wireless didn’t work well enough. Tim Shearer suggested I just do an interpretive dance in that eventuality. Luckily it didn’t come to that.

When is the dance?

There was no dance. Initially I thought the dance might happen later, but it didn’t. OK, I’ll admit it, I was never going to dance.

Did you have any efficiency problems with the big images and chrome?

On the big video walls in Hunt Library we often use Web technologies to create the content and Chrome for displaying it on the wall. For the most part we don’t have issues with big images or lots of images on the wall. But there’s a bit of a trick happening here. For instance, when we display images for My #HuntLibrary on the wall, they’re just images from Instagram, so only 600x600px. We initially didn’t know how these would look blown up on the video wall, but they end up looking fantastic. So you don’t necessarily need super high resolution images to make a very nice looking display.

Upstairs on the Visualization Wall, I display some digitized special collections images. While the possible resolution on the display is higher, the current effective resolution is only about 202px wide for each MicroTile. The largest image is then only 404px wide. In this case we are also using a Djatoka image server to deliver the images. Djatoka has an issue with the quality of its scaling between quality levels, where the algorithm chosen can make the images look very poor. How I usually work around this is to pick the quality level that is just above the width required to fit the design. Then the browser scales the image down and does a better job making it look OK than the image server would. I don’t know which of these factors affects the look on the Visualization Wall the most, but some images have a stair stepping look on some lines. This especially affects line drawings with diagonal lines, while photographs can look totally acceptable. We’ll keep looking for how to improve the look of images on these walls, especially in the browser.
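The workaround of picking the level just above the needed width can be sketched roughly as follows. This is only an illustration, assuming each lower level halves the width of the one above it; the function names and the sample dimensions are hypothetical and are not part of Djatoka's API.

```python
import math


def level_widths(full_width, levels):
    """Widths available at each level, smallest first.

    Assumption: each level down halves the width (rounded up).
    """
    return [math.ceil(full_width / 2 ** (levels - i)) for i in range(levels + 1)]


def pick_level(full_width, levels, target_width):
    """Return the lowest level whose width is at least target_width.

    Falls back to the full-size level when the target is wider than the image.
    """
    for level, width in enumerate(level_widths(full_width, levels)):
        if width >= target_width:
            return level
    return levels


# Hypothetical image: 4000px wide at full size, with 5 smaller levels.
widths = level_widths(4000, 5)      # 125, 250, 500, 1000, 2000, 4000
level = pick_level(4000, 5, 404)    # 500px is the first width >= 404
```

The image server is asked for the 500px version, and the browser handles the final step down to 404px.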

Have you got next act after Wikipedia?

This question is referring to the adaptation of Listen to Wikipedia for the Immersion Theater. You can see video of what this looks like on the big Hunt Library Immersion Theater wall.

I don’t currently have solid plans for developing other content for any of the walls. Some of the work that I and others in the Libraries have done early on has been to help see what’s possible in these spaces and begin to form the cow paths for others to produce content more easily. We answered some big questions. Can we deliver content through the browser? What templates can we create to make this work easier? I think the next act is really for the NCSU Libraries to help more students and researchers to publish and promote their work through these spaces.

Is it lunchtime yet?

In some time zone somewhere, yes. Hopefully during the conference lunch came soon enough for you and was delicious and filling.

Could you describe how testing worked more?

I wish I could think of some good way to test applications that are destined for these kinds of large displays. There’s really no automated testing that is going to help here. BrowserStack doesn’t have a big video wall that they can take screenshots on. I’ve also thought that it’d be nice to have a webcam trained on the walls so that I could make tweaks from a distance.

But Chrome does have screen emulation developer tools, which were super helpful for this kind of work. These kinds of tools are useful not just for mobile development, which is how they’re usually promoted, but for designing for very large displays as well. Even on my small workstation monitor I could get a close enough approximation of what something would look like on the wall. Chrome will shrink the content to fit the available viewport size. I could develop for the exact dimensions of the wall while seeing all of the content shrunk down to fit my desktop. This meant that I could develop and get close enough before trying it out on the wall itself. Being able to design in the browser has huge advantages for this kind of work.
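To give a feel for how much shrinking is involved, here is a small sketch of the shrink-to-fit arithmetic. It is not Chrome's actual implementation, and the wall and monitor dimensions are made-up numbers for illustration.

```python
def fit_scale(content_w, content_h, viewport_w, viewport_h):
    """Scale factor that fits the content inside the viewport.

    Uses the tighter of the two dimensions and never scales up past 1.0.
    """
    return min(viewport_w / content_w, viewport_h / content_h, 1.0)


# Hypothetical 6000x2000 wall previewed in a 1920x1080 browser viewport.
scale = fit_scale(6000, 2000, 1920, 1080)
preview_size = (round(6000 * scale), round(2000 * scale))
```

With these numbers the width is the limiting dimension, so the whole wall is previewed at roughly a third of its real size.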

I work at DH Hill Library while these displays are in Hunt Library. I don’t get over there all that often, so I would schedule some time to see the content on the walls when I happened to be over there for a meeting. This meant that there’d often be a lag of a week or two before I could get over there. This was acceptable as this wasn’t the primary project I was working on.

By the time I saw it on the wall, though, we were really just making tweaks for design purposes. We wanted the panels to the left and right of the Listen to Wikipedia visualization to fall along the bezel. We would adjust font sizes for how they felt once you’re in the space. The initial, rough cut work of modifying the design to work in the space was easy, but getting the details just right required several rounds of tweaks and testing. Sometimes I’d ask someone over at Hunt to take a picture with their phone to ensure I’d fixed an issue.

While it would have been possible for me to bring my laptop and sit in front of the wall to work, I personally didn’t find that to work well for me. I can see how it could work to make development much faster, though, and it is possible to work this way.

Race condition issues between devices?

Some spaces could allow you to control a wall from a kiosk and completely avoid any possibility of a race condition. When you allow users to bring their own device as a remote control to your spaces you have some options. You could allow the first remote to connect and lock everyone else out for a period of time. Because of how subscriptions and presence notifications work this would certainly be possible to do.
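The "first remote wins for a while" option could look something like the sketch below. This is not what we run; the class name, the 30 second hold, and the remote IDs are all hypothetical, and a real version would hook into whatever presence notifications the WebSocket service provides.

```python
import time


class RemoteLock:
    """Lets the first remote control the wall and locks others out for a while."""

    def __init__(self, hold_seconds, clock=time.monotonic):
        self.hold_seconds = hold_seconds
        self.clock = clock          # injectable so the lock can be tested
        self.owner = None
        self.expires_at = 0.0

    def try_acquire(self, remote_id):
        """Return True if this remote may control the wall right now."""
        now = self.clock()
        if self.owner is None or now >= self.expires_at:
            # Nobody holds the lock, or the hold has run out: hand it over.
            self.owner = remote_id
            self.expires_at = now + self.hold_seconds
            return True
        # Only the current owner gets through until the hold expires.
        return self.owner == remote_id


lock = RemoteLock(hold_seconds=30)
first = lock.try_acquire("remote-a")    # first remote gets control
second = lock.try_acquire("remote-b")   # second remote is locked out
```

In this sketch the hold does not extend when the owner keeps interacting, so every visitor eventually gets a turn.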

For Listen to Wikipedia we allow more than one user to control the wall at the same time. Then we use WebSockets to try to keep multiple clients in sync. Even though we attempt to quickly update all the clients, it is certainly possible that there could be race conditions, though it seems unlikely. Because we’re not dealing with persisting data, I don’t really worry about it too much. If one remote submits just after another but before it is synced, then the wall will reflect the last to submit. That’s perfectly acceptable in this case. If a client were to get out of sync with what is on the wall, then any change by that client would just be sent to the wall as is. There’s no attempt to make sure a client had the most recent, freshest version of the data prior to submitting.

While this could be an issue for other use cases, it does not adversely affect the experience here. We do an alright job keeping the clients in sync, but don’t shoot for perfection.
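The last-submission-wins behavior described above can be sketched without any networking at all. In this illustration plain Python objects stand in for WebSocket connections, and the class names and the "theme" setting are hypothetical rather than taken from the real application.

```python
class Remote:
    """Stands in for one phone or tablet connected over a WebSocket."""

    def __init__(self, name):
        self.name = name
        self.view = {}              # the remote's local copy of the wall settings


class Wall:
    """Holds the current settings and pushes them to every remote."""

    def __init__(self, settings):
        self.settings = dict(settings)
        self.remotes = []

    def connect(self, remote):
        self.remotes.append(remote)
        remote.view = dict(self.settings)

    def submit(self, changes):
        # No version check: whatever arrives is applied as is,
        # so the last submission simply wins.
        self.settings.update(changes)
        for remote in self.remotes:
            remote.view = dict(self.settings)


wall = Wall({"theme": "light"})
a, b = Remote("a"), Remote("b")
wall.connect(a)
wall.connect(b)
wall.submit({"theme": "dark"})      # sent from remote a
wall.submit({"theme": "blue"})      # sent from remote b just afterwards
```

After both submissions the wall and both remotes show the second value, which is the behavior we accept for this use case.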

How did you find the time to work on this?

At the time I worked on these I had at least a couple other projects going. When waiting for someone else to finish something before being able to make more progress, or on a Friday afternoon, I’d take a look at one of these projects for a little while. It meant the progress was slow, but these also weren’t projects that anyone was asking to be delivered on a deadline. I like to have a couple projects of this nature around. If I’ve got a little time, say before a meeting, but not enough for something else, I can pull one of these projects out.

I wonder, though, if this question isn’t more about why I did these projects. There were multiple motivations. A big motivation was to learn more about WebSockets and how the technology could be applied in the library context. I always like to have a reason to learn new technologies, especially Web technologies, and see how to apply them to other types of applications. And now that I know more about WebSockets I can see other ways to improve the performance and experience of other applications in ways that might not be as overt in their use of the technology as these projects were.

The real-time digital collections view is integrated into an application I’ve developed, and it did not take much to begin adding some new functionality. We do a great deal of business analytic tracking for this application. The site has excellent SEO for the kind of content we have. I wanted to explore other types of metrics of our success.

The video wall projects allowed us to explore several different questions. What does it take to develop Web content for them? What kinds of tools can we make available for others to develop content? What should the interaction model be? What messaging is most effective? How should we kick off an interaction? Is it possible to develop bring-your-own-device interactions? All of these kinds of questions will help us to make better use of these kinds of spaces.

Speed of an unladen swallow?

I think you’d be better off asking a scientist or a British comedy troupe.

Questions From Twitter

Mia (@mia_out) tweeted at 11:47 AM on Tue, Mar 25, 2014

@ostephens @ronallo out of curiosity, how many interactions compared to visitor numbers? And in-app or relying on phone reader?

sebchan (@sebchan) tweeted at 0:06 PM on Tue, Mar 25, 2014

@ostephens @ronallo (but) what are the other options for ‘interacting’?

This question was in response to how 80% of the interactions with the Listen to Wikipedia application are via QR code. We placed a URL and QR code on the wall for Listen to Wikipedia not knowing which would get the most use.

Unfortunately there’s no simple way I know of to kick off an interaction in these spaces when the user brings their own device. Once when there was a stable exhibit for a week we used a kiosk iPad to control a wall so that the visitor did not need to bring a device. We are considering how a kiosk tablet could be used more generally for this purpose. In cases where the visitor brings their own device it is more complicated. The visitor either must enter a URL or scan a QR code. We try to make the URLs short, but because we wanted to use some simple token authentication they’re at least 4 characters longer than they might otherwise be. I’ve considered using geolocation services as the authentication method, but they are not as exact as we might want them to be for this purpose, especially if the device uses campus wireless rather than GPS. We also did not want to have a further hurdle of asking for permission of the user and potentially being rejected. For the QR code the visitor must have a QR code reader already on their device. The QR code includes the changing token. Using either the URL or QR code sends the visitor to a page in their browser.
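The short changing token could be handled along these lines. This is a sketch under assumptions rather than our actual code: the 4 character length, the alphabet, the example.org URL, and the function names are all hypothetical.

```python
import hmac
import secrets
import string

# Lowercase letters and digits keep the URL easy to type on a phone.
ALPHABET = string.ascii_lowercase + string.digits


def new_token(length=4):
    """Generate a short random token to append to the remote URL."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def remote_url(base, token):
    """URL shown on the wall and encoded in the QR code."""
    return f"{base}/{token}"


def is_valid(submitted, current):
    """Check a visitor's token against the one currently on the wall."""
    return hmac.compare_digest(submitted.encode(), current.encode())


current = new_token()
url = remote_url("https://example.org/w", current)
```

Rotating the token just means calling `new_token()` again and redrawing the URL and QR code on the wall, after which older links stop validating.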

Because the walls I’ve placed content on are in public spaces, there is no good way to know how many visitors there are compared to the number of interactions. One interesting thing about the Immersion Theater is that I’ll often see folks standing outside of the opening to the space looking in, so even if there were some way to track folks going in and out of the space, that would not include everyone who has viewed the content.

Other Questions

If you have other questions about anything in my presentation, please feel free to ask. (If you submit them through the slides I won’t ever see them, so better to email or tweet at me.)